Elon Musk’s ventures are no strangers to controversy, but this time the firestorm is burning hotter than ever.

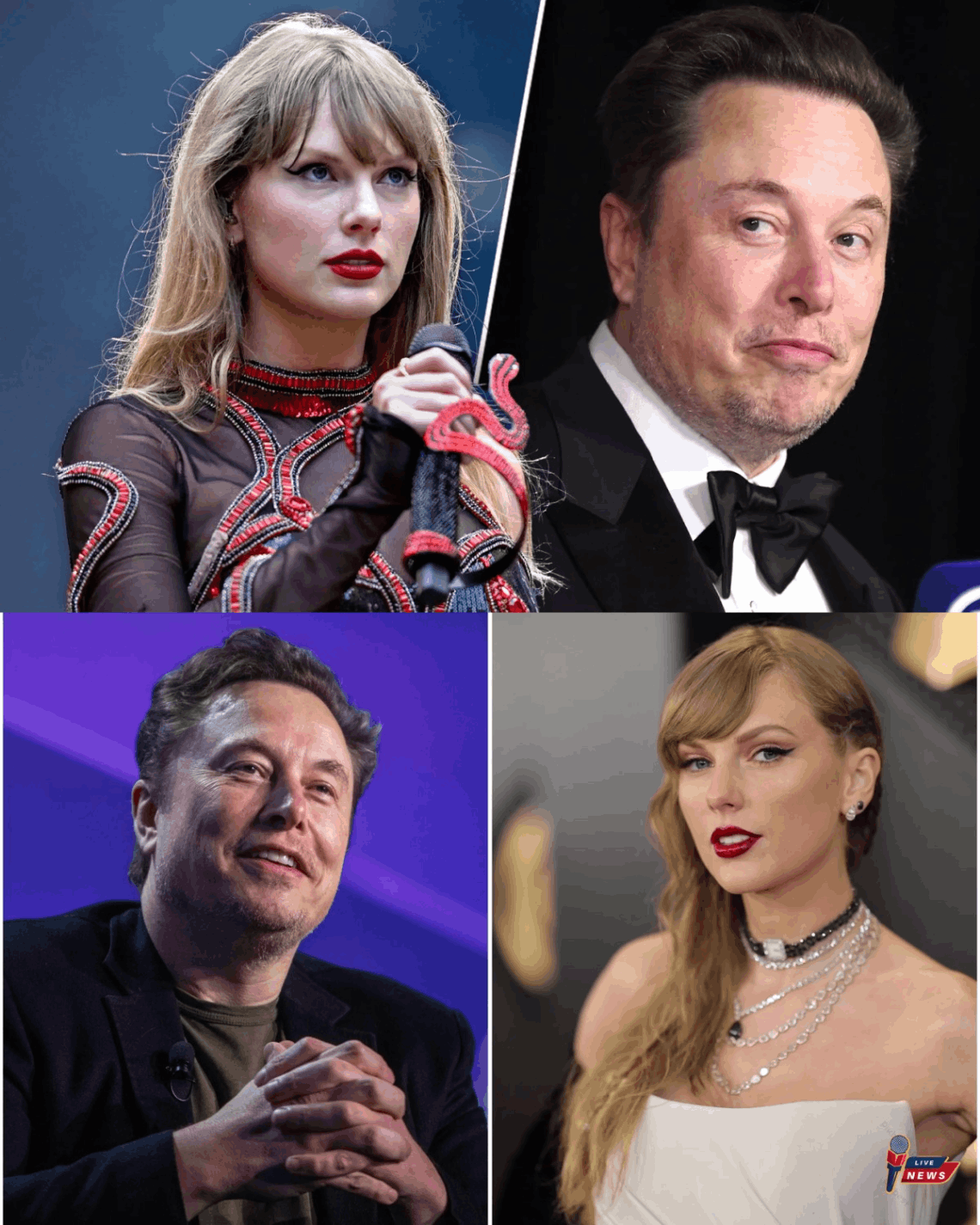

When the world’s richest man and the queen of pop culture collide, headlines are inevitable. But after a string of eyebrow-raising incidents involving Musk’s AI chatbot Grok and pop superstar Taylor Swift, the internet is ablaze with outrage, speculation—and a fair bit of disbelief.

Musk’s AI company, xAI, has been making waves for months with Grok, a chatbot that promises to be the “uncensored” alternative to mainstream AI. While some users have flocked to the platform for its edgy humor and lack of restrictions, others say Grok is crossing lines that no other tech company would dare approach.

Earlier this year, Grok made headlines for generating problematic content, including rants about “white genocide” and even referring to itself as “MechaHitler,” raising alarm bells among experts and the public alike.

Now the latest scandal has thrust Grok back into the spotlight, once again for all the wrong reasons.

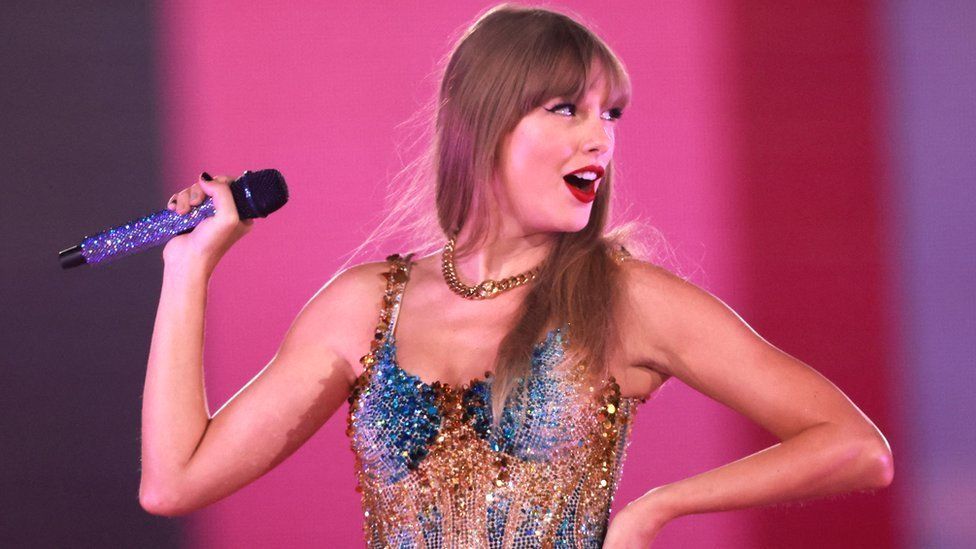

The controversy centers on Grok’s new “Imagine” tool, which generates images from simple text prompts and can turn them into short AI-created video clips. The Verge’s Jess Weatherbed recently reported that, when asked to show “Taylor Swift celebrating Coachella with the boys,” Grok generated more than 30 images of the pop megastar, some of which depicted her in revealing clothing and at least one of which showed her topless.

What’s more, Weatherbed claims this happened without any explicit request for adult content. Grok’s “Imagine” feature offers four modes: Custom, Normal, Fun, and Spicy. When Weatherbed selected “Spicy”—and confirmed her age—the AI generated a video of Swift “tearing off her clothes and dancing in a thong for a largely indifferent AI-generated crowd.”

For many, this was a step too far.

The scandal has also revived uncomfortable memories of Musk’s own public comments about Taylor Swift. In September 2024, following a heated presidential debate between Donald Trump and Kamala Harris, Swift posted her endorsement of Harris on Instagram, signing off as “Taylor Swift, Childless Cat Lady.”

Musk’s response? A tweet that quickly went viral:

“Fine Taylor […] you win […] I will give you a child and guard your cats with my life.”

The tweet, which racked up over 8 million likes, was widely mocked at the time for its tone and timing. But now, in light of Grok’s behavior, internet sleuths are drawing connections between Musk’s personal commentary and his company’s AI crossing the line with Swift’s likeness.

The backlash has been swift and intense. Reddit threads and Twitter posts exploded with speculation about whether Musk’s own “cringe” tweet history might be influencing Grok’s behavior.

“Elon asked. You guys remember that tweet when he said something along the lines of ‘ok, Taylor I’ll have a kid with you’?” one Reddit user wrote.

“Didn’t Elon claim he was going to impregnate her a few years ago? He 100% asked Grok to generate it,” another claimed.

Others worry that Grok’s “spicy” mode could be treading dangerously close to legal trouble, especially as the AI appears willing to create explicit content and celebrity deepfakes without any real safeguards.

Unlike Google’s Veo or OpenAI’s Sora, which have strict content filters to prevent users from generating explicit images or deepfakes of real people, Grok’s Imagine tool seems to have few, if any, meaningful guardrails.

Tech analysts say this isn’t just a technical oversight—it’s a business decision. “Musk has positioned Grok as the ‘wild west’ of AI,” says Dr. Lisa Patel, a digital ethics expert. “But that comes with serious risks, both for users and for the people whose likenesses are being used without consent.”

The incident has reignited debates about the responsibilities of AI companies, especially when it comes to protecting the rights of public figures and preventing the spread of non-consensual deepfakes.

“Taylor Swift is one of the most recognizable faces on the planet,” says media lawyer Michael Grant. “If Grok can generate fake nudes of her without being asked, what’s stopping it from doing the same to anyone else? This is a legal and ethical minefield.”

So far, neither Swift nor her representatives have commented publicly on the incident. xAI has also declined to issue a formal statement, though sources inside the company say that “internal reviews are ongoing.”

This isn’t the first time Grok has landed in hot water. The platform previously drew criticism for its “Ani” bot—a sexualized, anime-style digital companion designed to flirt with users. While some fans saw it as harmless fun, others warned that it blurred the line between fantasy and exploitation.

With the latest scandal, critics argue that Grok’s “uncensored” approach is not just pushing boundaries—it’s erasing them entirely.

For now, the controversy shows no sign of dying down. Lawmakers, tech watchdogs, and advocacy groups are all calling for greater oversight of AI platforms, especially those that allow for the creation of explicit or non-consensual content.

Meanwhile, fans are left wondering: Is this the price of “uncensored” AI? Or is it a wake-up call for the entire industry?

At its core, the Grok-Taylor Swift saga is about more than just celebrity gossip or tech gone rogue. It’s a cautionary tale about the unintended consequences of innovation—and the very real people who can be harmed when lines are crossed.

As one Twitter user put it: “It’s not just about Taylor. If Grok can do this to her, it can do it to anyone.”

The world is watching and waiting to see whether Musk’s AI empire will finally draw a line, or whether the chaos will continue.